How to Scrape Ecommerce Websites: A Comprehensive Guide

Do you own an ecommerce business and want to outperform your competitors? The key to gaining this competitive edge lies in understanding the market and your competitors’ strategies. That is where learning how to scrape ecommerce websites could be your golden ticket to success.

In this guide, we explore the two major web scraping techniques and briefly discuss how to scrape ecommerce websites using both methods. And don’t worry if coding isn’t within your skillset, as one of these methods needs zero coding skills.

So keep reading to uncover the web scraping techniques and give your business a boost.

Is Web Scraping Profitable?

In the digital era, web scraping has become incredibly popular among businesses. By extracting vital data such as product prices, descriptions, and customer reviews, businesses gain critical insights into market trends, competitor strategies, and customer preferences.

This information lets them make better-informed decisions and stay ahead of the competition. Whether for pricing strategies, product development, or market analysis, the data obtained through web scraping can be a goldmine for businesses. Used well, web scraping can help businesses increase their profits.

Web Scraping Techniques

Before diving into a comprehensive guide about how to scrape ecommerce websites, let’s start with a quick look at web scraping techniques.

Web scraping can be done in many ways, using various tools and techniques such as programming languages, frameworks, libraries, databases, and editors. Broadly, though, web scraping falls into two main categories: manual web scraping and automated web scraping.

Manual web scraping is the technique of scraping web pages using a programming language, such as Python, which requires coding skills and knowledge. On the other hand, automated web scraping is the technique of scraping web pages using a software tool, such as Bardeen, which does not require coding skills and knowledge.

Let’s explore these two web scraping techniques in a little more detail.

Manual Web Scraping (Uses programming language like Python)

As mentioned earlier, manual web scraping involves writing code to extract data from websites. This technique typically involves using a programming language like Python, which is popular for its powerful libraries, such as BeautifulSoup and Scrapy. These libraries help in parsing and navigating the structure of web pages.

Manual web scraping provides a high level of customization and control over the data extraction process. It allows for precise targeting of data and the ability to handle complex web structures or data formats. However, it requires programming skills and a good understanding of web technologies.

Automated Web Scraping (Uses a tool to scrape the web)

Automated web scraping is especially helpful for users without a programming background, as it provides a simplified way to scrape an entire website. It involves the use of specialized software designed to navigate and scrape data from websites based on predefined parameters.

Automated web scraping is particularly useful for tasks like scraping data from e-commerce websites or collecting information across multiple web pages. It simplifies the process of data extraction, making it accessible to a wider audience who may need to scrape e-commerce websites but lack the technical skills for manual scraping.

While automated web scraping is convenient, fast, and simple, it may not offer the same level of control and customization as manual web scraping.

Scrape Ecommerce Website Manually

Now that you are familiar with web scraping techniques, let’s take a step further and show you how to scrape ecommerce websites. For this guide, we will be using Python.

Step#1: Install Python

First things first, install Python. It’s best to download it from the official website, python.org. As of December 2023, the latest version of Python is 3.12.1, but older versions are also available.

Step#2: Install Required Libraries

To scrape ecommerce websites, libraries like BeautifulSoup or Scrapy are ideal. BeautifulSoup is great for simple tasks and small-scale scraping, while Scrapy is more suitable for large-scale and complex scraping operations.

In this tutorial, we’re using BeautifulSoup. We will also be using the requests library that will fetch the data from the given URL. Once the data is fetched, we will use the BeautifulSoup library to parse the data and extract our desired information from it.

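Open the command prompt and run the following command, which installs requests, BeautifulSoup (package name beautifulsoup4), the lxml parser, and pandas: pip install requests beautifulsoup4 lxml pandas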

Pandas is a Python data manipulation library that provides a data structure known as a DataFrame. We will need it to export the collected data to a CSV file.
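A minimal sketch of that export step, assuming each scraped product has already been collected as a Python dictionary. The function name save_products_csv and the default file name products.csv are hypothetical, chosen only for illustration:

```python
import pandas as pd


def save_products_csv(rows: list[dict], path="products.csv") -> pd.DataFrame:
    """Turn a list of product dictionaries into a DataFrame and write it to CSV.

    save_products_csv and the default file name are hypothetical examples.
    """
    df = pd.DataFrame(rows)       # each dict becomes one row; its keys become the columns
    df.to_csv(path, index=False)  # index=False leaves out pandas' numeric row index
    return df
```

Returning the DataFrame as well as writing the file lets you inspect the data (for example with df.head()) before opening the CSV.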

Step#3: Importing Libraries

Now that the libraries are available, it’s time to get to coding. Open your code editor and import the libraries we just installed.

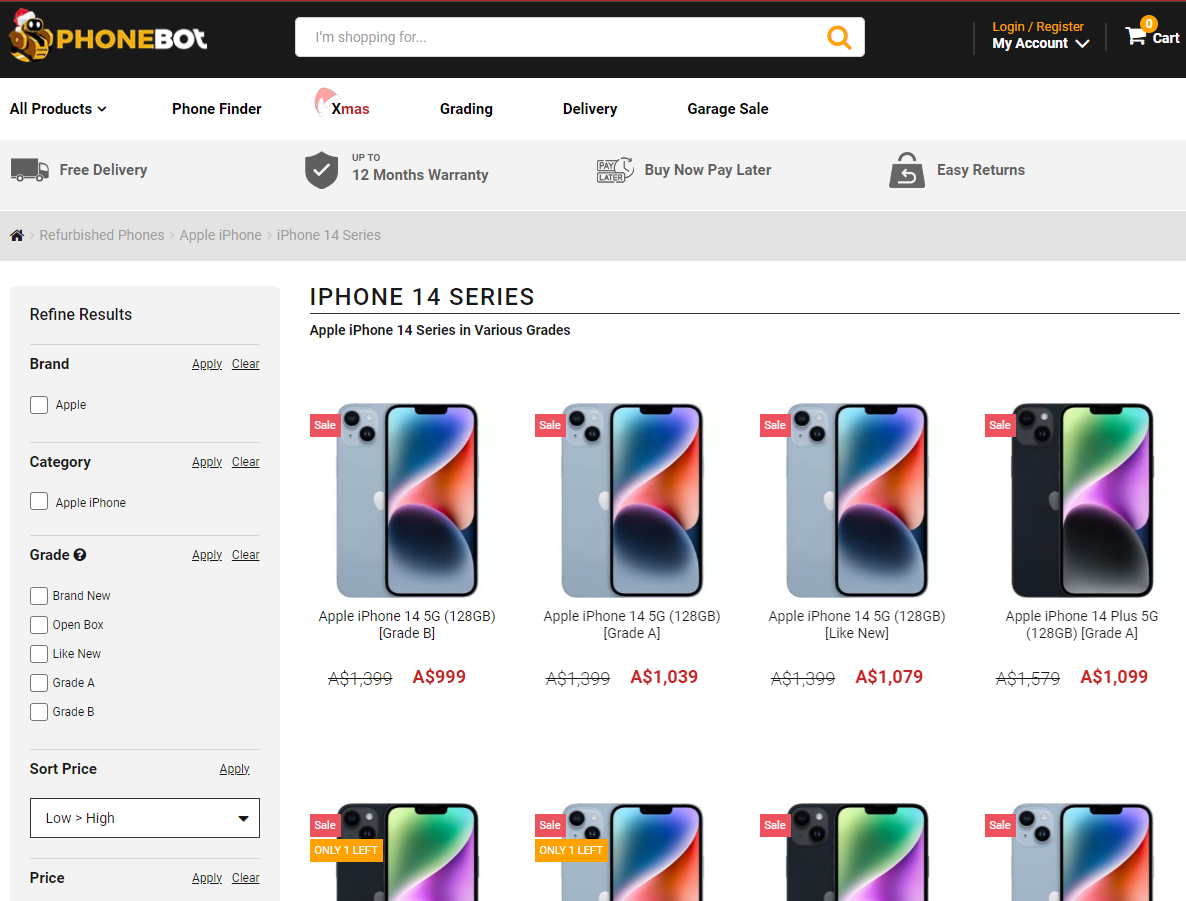
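In text form, the import step might look like the following, assuming the packages above are installed (note that the BeautifulSoup class is imported from the bs4 package):

```python
# Sends HTTP requests to download web pages
import requests
# Parses HTML so we can search it by tag and class
from bs4 import BeautifulSoup
# Holds the scraped rows in a DataFrame and exports them to CSV
import pandas as pd
```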

Step#4: Identifying the Target Website

Determine which ecommerce site hosts the data you need. This could be text, images, links, or any other information embedded in the webpage. To demonstrate, we will extract product data from the iPhone 14 category page of a refurbished-phone ecommerce site.

Step#5: Request Content Retrieval

Now, we’ll send a request to the hosting server to access the contents of this page.

The response returned by requests.get() is stored in the variable url.

Next, we parse the HTML held in the url response to get the target data.

url.text holds the HTML content of the webpage as a string, and "lxml" tells BeautifulSoup which parser to use. lxml is a fast and efficient parsing library for Python.
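A minimal sketch of this step is below. TARGET_URL is a hypothetical placeholder, since the guide does not name the site, and the 10-second timeout and the raise_for_status() check are assumptions added for robustness. Following the guide, the response is stored in url and its text is parsed with lxml:

```python
import requests
from bs4 import BeautifulSoup

# Hypothetical placeholder: replace with the category page you want to scrape
TARGET_URL = "https://example.com/iphone-14"


def parse_html(html: str) -> BeautifulSoup:
    """Parse an HTML string with the lxml parser."""
    return BeautifulSoup(html, "lxml")


def fetch_page(target_url: str = TARGET_URL) -> BeautifulSoup:
    """Download the page and return it as a parsed BeautifulSoup tree."""
    # The response object is named `url`, matching the guide's naming
    url = requests.get(target_url, timeout=10)
    # Stop on 4xx/5xx responses instead of parsing an error page
    url.raise_for_status()
    # url.text is the page's HTML as a string
    return parse_html(url.text)
```

Calling soup = fetch_page() downloads and parses the page; keeping parsing in its own function also lets you test your selectors against a saved HTML file without hitting the site again.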

Step#6: Inspect The Webpage for Required Elements

Let’s find out which tags hold the data we need. Just right-click anywhere on the page and select Inspect.

Inspecting the page shows that the details of each phone are inside a <div> tag with the classes pros-cont-wrap and detail-prodt. The image, title, and old and new prices are also visible inside it.

Step#7: Start Scraping the Details

Let’s say we want the title and the old and new prices of every phone on the page. We can create a for loop that goes through each product container and collects this data.
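The loop could look roughly like this. Only the container classes pros-cont-wrap and detail-prodt come from the inspection above; the inner selectors for the title and the two prices (h2, .old-price, .new-price) are hypothetical, so replace them with whatever the Inspect panel shows on your target page:

```python
from bs4 import BeautifulSoup


def text_or_none(tag):
    """Return a tag's stripped text, or None if the selector found nothing."""
    return tag.get_text(strip=True) if tag is not None else None


def extract_products(soup: BeautifulSoup) -> list[dict]:
    """Collect the title and old/new prices from every product container."""
    products = []
    # Every <div> carrying both classes holds one phone listing
    for card in soup.select("div.pros-cont-wrap.detail-prodt"):
        products.append({
            # Hypothetical inner selectors: adjust them to the real page
            "title": text_or_none(card.select_one("h2")),
            "old_price": text_or_none(card.select_one(".old-price")),
            "new_price": text_or_none(card.select_one(".new-price")),
        })
    return products
```

Called with the parsed page from Step#5 (a BeautifulSoup object), extract_products returns one dictionary per phone, a shape pandas can turn directly into a DataFrame for CSV export. Returning None for a missing field, rather than raising an error, keeps one incomplete listing from stopping the whole run.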

Scrape Ecommerce Website Automatically

Don’t have the coding skills to try the manual method? No worries! We’ll show you how to scrape ecommerce websites efficiently with automated web scraping tools. These tools are designed for ease of use and are ideal for those who aren't familiar with programming. With automated scraping, you simply choose the data you want to extract, and the tool does the rest for you.

Let's see how it's done in more detail.

Step#1: Pick A Scraping Tool

Choose a reputable no-code tool such as Bardeen or Octoparse, based on your scraping needs. Bardeen works as a browser extension, so add it to your web browser.

Step#2: Setting Up The Tool

Install Bardeen’s free Chrome extension and configure the tool according to your requirements. Bardeen has a pre-built scraper model; either use that or make your own.

Step#3: Start Scraping Data From Target Sites

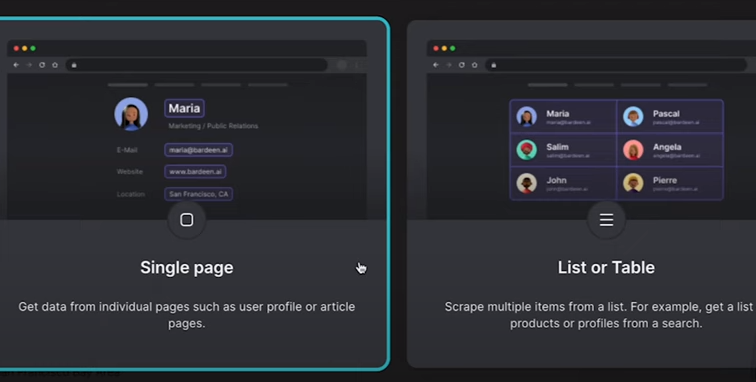

Simply open the target webpage in your browser and click the Bardeen extension icon to open it. Bardeen offers two extraction options; choose the one you need.

Now select the specific data you wish to scrape. This could be product names, prices, descriptions, images, etc. You usually do this by clicking on the data elements on the webpage, and Bardeen will recognize and mark these for scraping.

Step#4: Running The Scraping Process

Execute the scraping action. Bardeen will collect the data from the website based on your specifications.

Step#5: Exporting The Data

After extraction is done, Bardeen lets you view the data in Google Sheets or download it as a CSV or another file format.

Use AdsPower for Safe and Secure Web Scraping

Both automated and manual web scrapers risk being blocked by websites that use anti-scraping measures. Both methods need an added layer of protection so that their activity looks like human browsing rather than a bot. This is where AdsPower comes in, especially when considering how to scrape ecommerce websites.

The AdsPower browser helps make web scraping smoother by getting past anti-scraping challenges. So, whether you're scraping manually or with automated tools, AdsPower helps you scrape entire websites with a lower risk of detection. Its scalability and multi-profile features can also speed up the data extraction process.

Let’s get Web Scraping!

Learning how to scrape ecommerce websites can revolutionize your business. If you have some programming know-how or the budget to hire a web scraping specialist, manual web scraping lets you reap the benefits of full control and customize the process as far as you need.

But if coding isn’t your cup of tea, automated web scraping tools can ease the process and scrape websites for you. Now that you have learned both methods in our guide, you're set to take your ecommerce business to new heights.
