How to Use Proxies for Web Scraping Without Getting Blocked

Take a Quick Look

Rationally and effectively using proxies will help your web scraping without getting blocked. Discover tips to maintain anonymity and scrape data seamlessly. Ready to optimize your scraping process? Explore our guide and start scraping smarter today!

Web Scraping is a technique used to extract data from web pages. It works by sending requests to target websites similar to how a browser does. This enables the retrieval of various information such as text, images, and links.

Web scraping has several applications:

-

E-commerce: Facilitates price monitoring, helping businesses and consumers make better purchasing decisions and set competitive prices.

-

News: Enables aggregation from various sources, providing a comprehensive news overview on one platform.

-

Lead Generation: Aids businesses in finding potential customers by extracting relevant data, and enhancing marketing effectiveness.

-

SEO: Used for monitoring search engine result pages and optimizing website content and strategies.

-

Finance: In applications like Mint (US) and Bankin' (Europe), it allows bank account aggregation for streamlined personal finance management.

-

Research: Helps individuals and researchers create datasets that are otherwise inaccessible, promoting academic research and data-driven projects.

But many are tired of having web scraping projects blocked or blacklisted. In fact, following as many best practices as possible is all it takes to avoid an unsuccessful web data extraction process.

The trick to performing successful web scraping is to avoid being treated like a robot and bypassing the site's anti-bot systems. This is because the vast majority of websites do not welcome data scraping and will make efforts to block the process.

This post will help you understand the various pieces of information that websites use to prevent information from being crawled, and what strategies you can implement to successfully overcome these anti-bot barriers.

Choose the Right Proxies

The primary way websites detect web scrapers is by checking their IP addresses and tracking how they behave.

If the server detects strange behaviour or an unlikely frequency of requests, etc. from a user, the server blocks that IP address from accessing the site again.

This is where the use of a proxy server comes in. Proxies allow you to use different IP addresses to make it appear that your requests are coming from all over the world. This helps to avoid detection and blocking.

In order to avoid sending all requests from the same IP address, you are recommended to use an IP rotation service, which hides the real IP when crawling the data.

Also, to increase the success rate one should choose the right proxy. For example, for more advanced sites with anti-crawl mechanisms, residential proxies are more appropriate.

It's worth noting that the quality of your proxy server can greatly affect the success of your data crawl, so it's worth investing in a good proxy server.

IPOasis's proxies can help businesses perform web scraping without detection or being blocked. Their proxies overcome geo-restrictions, rotate IP addresses, and perform scraping at scale without disrupting the target website's normal operation.

With over 80 million real residential IPs from 195 countries, IPOasis's proxies scraping proxies provide the flexibility and scalability needed for any web scraping project. You'll never have to worry about getting blocked again while collecting public web data.

Additionally, IPOasis's proxies perform at peak times and large scales, ensuring that customers always have the proxies they need at any time. Avoid being flagged by using unlimited sessions and changing IP locations as often as needed. Trust in the reliability and effectiveness of scraping proxies for data collection needs.

Manage Request Rates

-

Slow down requests: Because you need to mimic the behavior of normal users, avoid sending a large number of requests in a very short period of time. No real user browses a website this way, and this behaviour is easily detected by anti-crawling techniques. For example, if human users typically wait a few seconds between page loads, then you should space out requests accordingly. A good rule of thumb is to limit the number of requests per minute depending on the nature of the site.

-

Randomize the request interval: introduce random delays between requests, ranging from a few seconds to a few minutes to increase randomness to avoid detection. This will make your traffic patterns look more like those of real users, who may spend different amounts of time between actions on the site. You can use a programming function to generate random numbers within a range to set the time delay between requests.

Set Proper Headers

-

User-Agent String: The User-Agent header tells the site which browser and operating system the user is accessing. One way to flexibly apply this strategy to make your requests appear to come from different browsers and devices is to use multiple User-Agent strings. For example, you can rotate the User - Agent strings for Chrome on Windows, Firefox on Mac, and Safari on iOS.

-

The Referrer Header: The Referrer Header is a part of the HTTP header. It's used to identify the web page from which a request is initiated. In simple terms, when a browser navigates from one web page to another, it's used to identify the web page from which the request was initiated. browser navigates from one web page (the source page) to another web page (the destination page) and sends a request, the Referrer Header tells the destination page where the request came from. Setting up a valid Referrer can make your requests look more legitimate. If you are searching for a product page on an e-commerce site, you can set the Referrer to link to the category page of the product page. These strategies help mask your crawling activity and create more human interaction with the site, thus minimizing the chances of triggering anti-bot defenses.

Use a Headless Browser

A headless browser is a powerful tool for web scraping without triggering anti-bot systems. Unlike standard web scraping libraries, headless browsers like Puppeteer or Selenium replicate human browsing by loading complete web pages, including JavaScript and dynamic content.

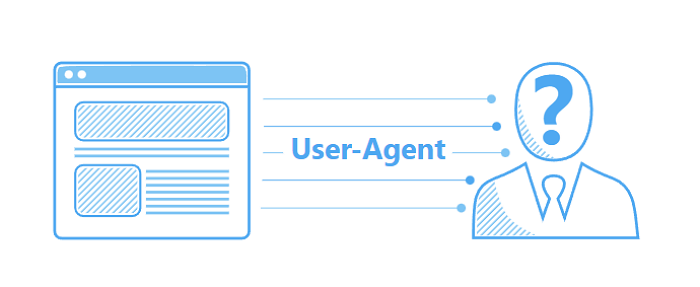

When paired with the AdsPower browser, your scraping process becomes even more efficient. AdsPower allows you to start headless modewith headless and API-key. With it, you can configure unique browser fingerprints and seamlessly integrate proxies, making it difficult for websites to detect automated activity. This combination provides enhanced anonymity and reduces the risk of getting blocked.

With AdsPower, you can simulate authentic browsing behavior while rotating IPs and managing multiple accounts effortlessly. This ensures smooth scraping, even on sites with strict anti-crawling mechanisms, making your web scraping projects relatively successful and secure.

With the practical ways above, you can scrape website data without getting blocked. Ready to enhance your web scraping strategy? Start implementing these best practices today and explore how AdsPower and IPOasis proxy can streamline your efforts.

Tip: How to Set Up IPOasis in AdsPower?

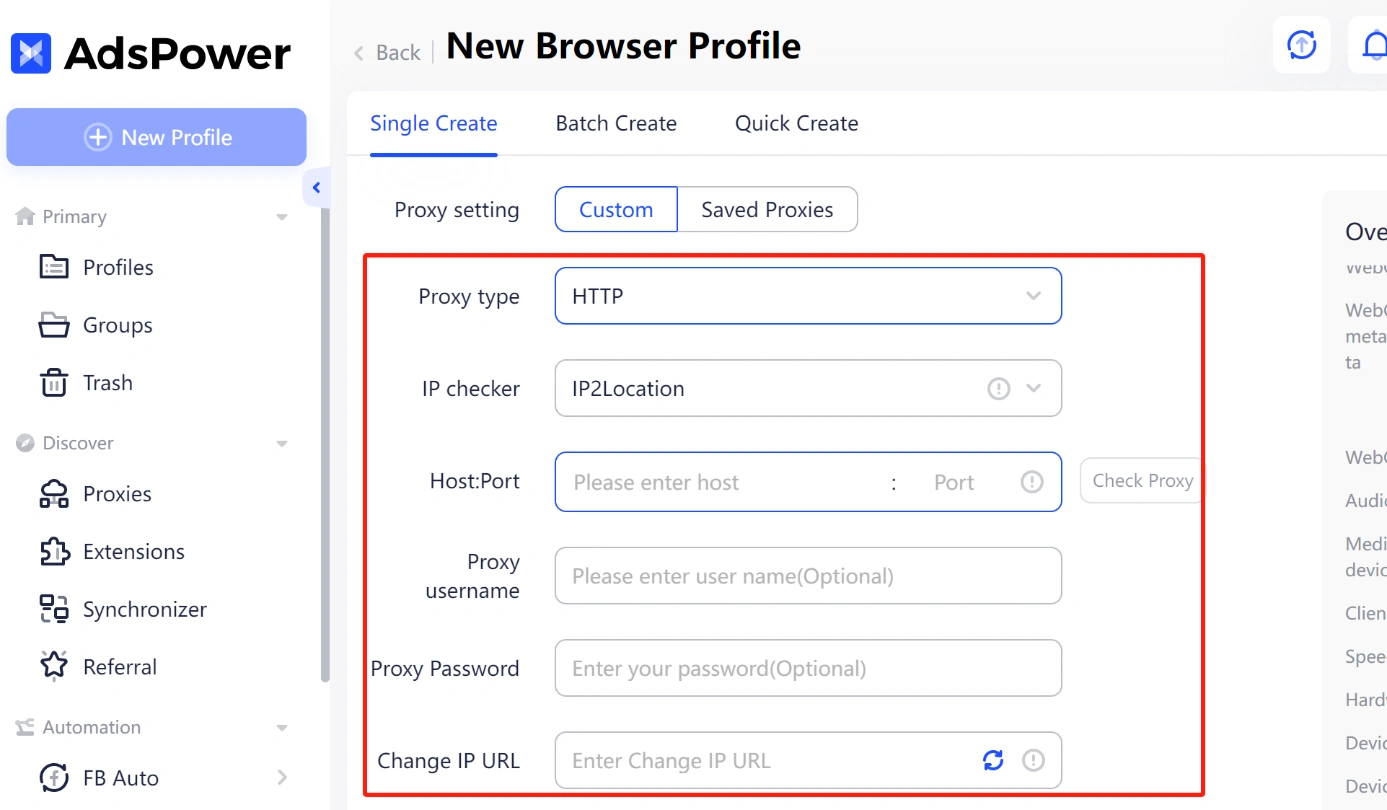

Step 1. Once you've downloaded and installed the AdsPower software, open it and click "New Profile" to create a fresh browser profile.

Step 2. On the Create New Profile screen, go to the Proxy section. Select the proxy type you want to use, such as HTTP or SOCKS5.

Step 3. After choosing the protocol, you usually don't need to adjust the IP Checker unless you have special preferences.

Then, go to your IPOasis dashboard to generate a proxy. Copy the details and paste the Host, Port, Username, and Password into AdsPower.

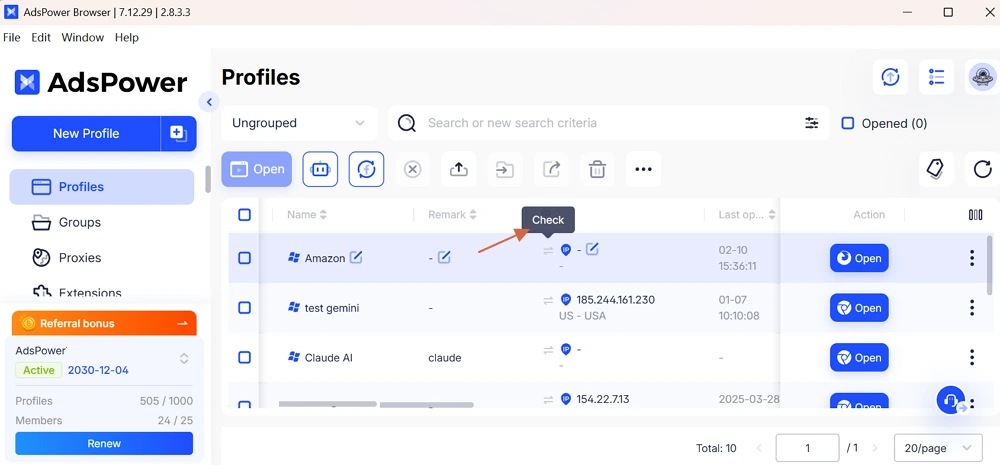

Step 4. Next, move to the proxy verification step and click "Check Proxy".

If the connection is successful, you'll see a green confirmation message below. After that, click "OK" to finish setting up the new profile.

People Also Read

- 7 Best Tools for Solving Captcha in 2026 Compared (Pros & Cons)

7 Best Tools for Solving Captcha in 2026 Compared (Pros & Cons)

CAPTCHA solvers 2026 compared for web scraping, speed, accuracy and pricing. Find the best tool for reCAPTCHA and Cloudflare challenges.

- Why Multi-Store Sellers Choose Doba + AdsPower for Scalable Growth

Why Multi-Store Sellers Choose Doba + AdsPower for Scalable Growth

Multi-store ecommerce made easy with Doba and AdsPower. Automate dropshipping, secure accounts, and scale safely on Shopify, Amazon, and eBay.

- CAPTCHA Solve Automation: A Comparison of CAPTCHA-Solving Services for Developers

CAPTCHA Solve Automation: A Comparison of CAPTCHA-Solving Services for Developers

Compare leading CAPTCHA solve services for automation. Review speed, accuracy, pricing, and APIs to choose the best CAPTCHA-solving solution.

- How Facebook Ad Account Rental Works: A Practical Guide for Growing Advertisers

How Facebook Ad Account Rental Works: A Practical Guide for Growing Advertisers

This guide explains Facebook ad account rental and how to scale safely with Meta-whitelisted accounts using GDT Agency and AdsPower.

- 7 Multi-Account Management Mistakes Affiliates Must Avoid in 2026

7 Multi-Account Management Mistakes Affiliates Must Avoid in 2026

Learn the 7 costly multi-account mistakes affiliates make and how to fix them. Manage accounts safely and scale profits with tools like AdsPower